How Audio AI Agents Are Cheating in Technical Interviews (and How to Spot It)

In 2024, recruiters worried about candidates copy-pasting coding challenges into ChatGPT. By 2025, the worry had shifted to "copilot" extensions that overlay answers on the candidate's screen.

Now, in 2026, the threat has evolved again, and it’s invisible.

Audio AI cheating is the latest frontier in interview fraud. A candidate can sit in front of the camera, hands visible, looking straight at you, while an AI agent listens to your questions and feeds a polished answer into their ear.

For technical recruiters and hiring managers, this changes the game. If you rely on standard video calls for your technical screens, your "gut feeling" is no longer enough. Here is how this technology works, the red flags to watch for, and how to secure your hiring funnel.

What is Audio AI Cheating?

Definition: Audio AI cheating occurs when a candidate uses a real-time voice-processing tool to capture the interviewer's speech. The tool transcribes the question, queries an LLM (like GPT-4 or Claude), and uses text-to-speech to "whisper" the answer back to the candidate via an earpiece, all in seconds.

Unlike previous cheating methods, this requires zero typing. The candidate doesn’t need to look away from the camera or use a second monitor. To an untrained eye, it looks like a smooth, highly knowledgeable conversation.

3 Signs Your Candidate Is Using an Audio Agent

Because the technology relies on a "Listen → Process → Speak" loop, it leaves behind specific behavioral artifacts. Here is how to spot them.

1. The "Processing" Latency

Even the fastest pipeline adds delay, because it has to transcribe the question, generate an answer, and speak it. If you ask a conversational question like, "What are the trade-offs between using an ALB versus an NLB?", a real human usually reacts immediately (a nod, a "hmm," or a filler word).

- The Cheat: The candidate sits in absolute silence for 3-5 seconds after you finish speaking, then suddenly begins a perfectly structured answer.

- Why: They are waiting for the AI to transcribe your question, generate an answer, and start reading it into their ear.
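If you have a timestamped, speaker-labelled transcript of the call, the latency signal can be checked mechanically. The sketch below is a minimal illustration, assuming a hypothetical `Segment` record (speaker, start, end, text) produced by some diarization step; the filler-word list and the 3-second threshold (taken from the 3-5 second range above) are illustrative assumptions, not validated values. A flag is a prompt for human review, not proof: honest candidates also pause to think.

```python
from dataclasses import dataclass

# Hypothetical transcript segment, e.g. from a diarized recording of the call.
@dataclass
class Segment:
    speaker: str  # "interviewer" or "candidate" (assumed labels)
    start: float  # seconds from the start of the call
    end: float
    text: str

# Illustrative filler words that suggest a natural, immediate reaction.
FILLERS = {"hmm", "um", "uh", "so", "well"}

def flag_silent_gaps(segments, threshold=3.0):
    """Return (question_end, gap) for every interviewer-to-candidate hand-off
    where the candidate stays silent for at least `threshold` seconds and
    then opens without a filler word."""
    flagged = []
    for prev, cur in zip(segments, segments[1:]):
        if prev.speaker != "interviewer" or cur.speaker != "candidate":
            continue
        gap = cur.start - prev.end
        words = cur.text.lower().split()
        first_word = words[0].strip(",.!?") if words else ""
        if gap >= threshold and first_word not in FILLERS:
            flagged.append((prev.end, round(gap, 2)))
    return flagged

calls = [
    Segment("interviewer", 0.0, 5.0, "What are the trade-offs between an ALB and an NLB?"),
    Segment("candidate", 5.4, 20.0, "Hmm, so it depends on the layer..."),
    Segment("interviewer", 21.0, 25.0, "How would you handle TLS termination?"),
    Segment("candidate", 29.0, 40.0, "TLS termination can be performed at the load balancer."),
]
print(flag_silent_gaps(calls))  # [(25.0, 4.0)]
```

Only the second answer is flagged: it follows four seconds of silence and opens straight into a structured sentence.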

2. The "Transcription" Loop

Audio AI agents sometimes mishear the interviewer. If the transcription drops or garbles a key word, the model can't produce a usable answer.

- The Cheat: The candidate repeatedly asks you to repeat the question even when the connection is clear, or repeats your question aloud, word for word, before answering.

- Why: Repeating the question out loud gives the AI a second chance to "hear" the input clearly through the candidate's own microphone.
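The word-for-word echo can be approximated by comparing the question with the opening of the reply. This is a minimal sketch using Python's standard `difflib.SequenceMatcher` on word tokens; the function names are hypothetical, and the idea of comparing only the first few words of the reply is an assumption chosen to isolate an echo from the rest of the answer.

```python
import re
from difflib import SequenceMatcher

def tokens(text):
    """Lowercase word tokens with punctuation stripped."""
    return re.findall(r"[a-z0-9']+", text.lower())

def echo_ratio(question, reply):
    """Similarity (0.0 to 1.0) between the question and the opening words of
    the reply; values near 1.0 mean the reply starts by echoing the question."""
    q = tokens(question)
    head = tokens(reply)[: len(q)]  # compare only as many words as the question has
    if not q or not head:
        return 0.0
    return SequenceMatcher(None, q, head).ratio()

question = "What are the trade-offs between using an ALB versus an NLB?"
print(echo_ratio(question, "What are the trade-offs between using an ALB versus an NLB? Well, an ALB..."))  # 1.0
print(echo_ratio(question, "It depends on whether you need layer 7 routing."))  # 0.0
```

Some candidates restate a question to buy thinking time, so a high ratio on its own is weak evidence; it matters mostly when it recurs alongside the other signals.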

3. The "Textbook" Tone

LLM answers tend to read like documentation, not conversation.

- The Cheat: The candidate's phrasing sounds like a Wikipedia article. They might use phrases like "Furthermore," "In conclusion," or "It is crucial to note that..." in casual conversation.

- Why: They are repeating, verbatim, what the voice in their ear is saying. Their delivery often lacks the emotional intonation of someone recalling a messy, real-world engineering war story.
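One rough way to quantify the "textbook" tone is to count formal connective phrases per 100 words of the candidate's transcript. The sketch below is illustrative only: the marker list holds just the three phrases quoted above, and `formal_marker_rate` is a hypothetical helper. A real list would need tuning, and some people simply speak formally.

```python
import re

# Phrases taken from the examples above; an assumed, untuned list.
FORMAL_MARKERS = ("furthermore", "in conclusion", "it is crucial to note that")

def formal_marker_rate(transcript, markers=FORMAL_MARKERS):
    """Occurrences of formal connective phrases per 100 words of transcript."""
    words = re.findall(r"[a-z0-9']+", transcript.lower())
    if not words:
        return 0.0
    text = " ".join(words)  # normalized: lowercase, single spaces, no punctuation
    hits = sum(len(re.findall(r"\b" + re.escape(m) + r"\b", text)) for m in markers)
    return 100.0 * hits / len(words)

scripted = "Furthermore, the ALB terminates TLS. In conclusion, it is crucial to note that NLBs are faster."
casual = "Honestly, we just picked the ALB because it was already there."
print(formal_marker_rate(scripted))  # 18.75
print(formal_marker_rate(casual))    # 0.0
```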

Why Traditional Video Interviews Are Failing

Many engineering teams rely on Zoom or Google Meet for the "culture fit" or "technical discussion" round. The problem is that these platforms are designed for communication, not security. They cannot detect:

- Hidden browser tabs running audio capture.

- Virtual audio cables routing your voice to an AI.

- The difference between a candidate looking at you vs. looking at a translucent teleprompter overlay.

How to Stop It (Without Being Draconian)

You don’t need to ban headphones or force candidates to interview blindfolded. The solution is moving from observation to interactive assessment.

1. Switch to Abstract Problem Solving

AI struggles with ambiguity. Instead of asking "What is the syntax for a React Hook?", ask an open-ended question such as "Tell me about a time a database migration failed and how you fixed it." Personal experience is harder for an AI to fabricate in real time without sounding generic.

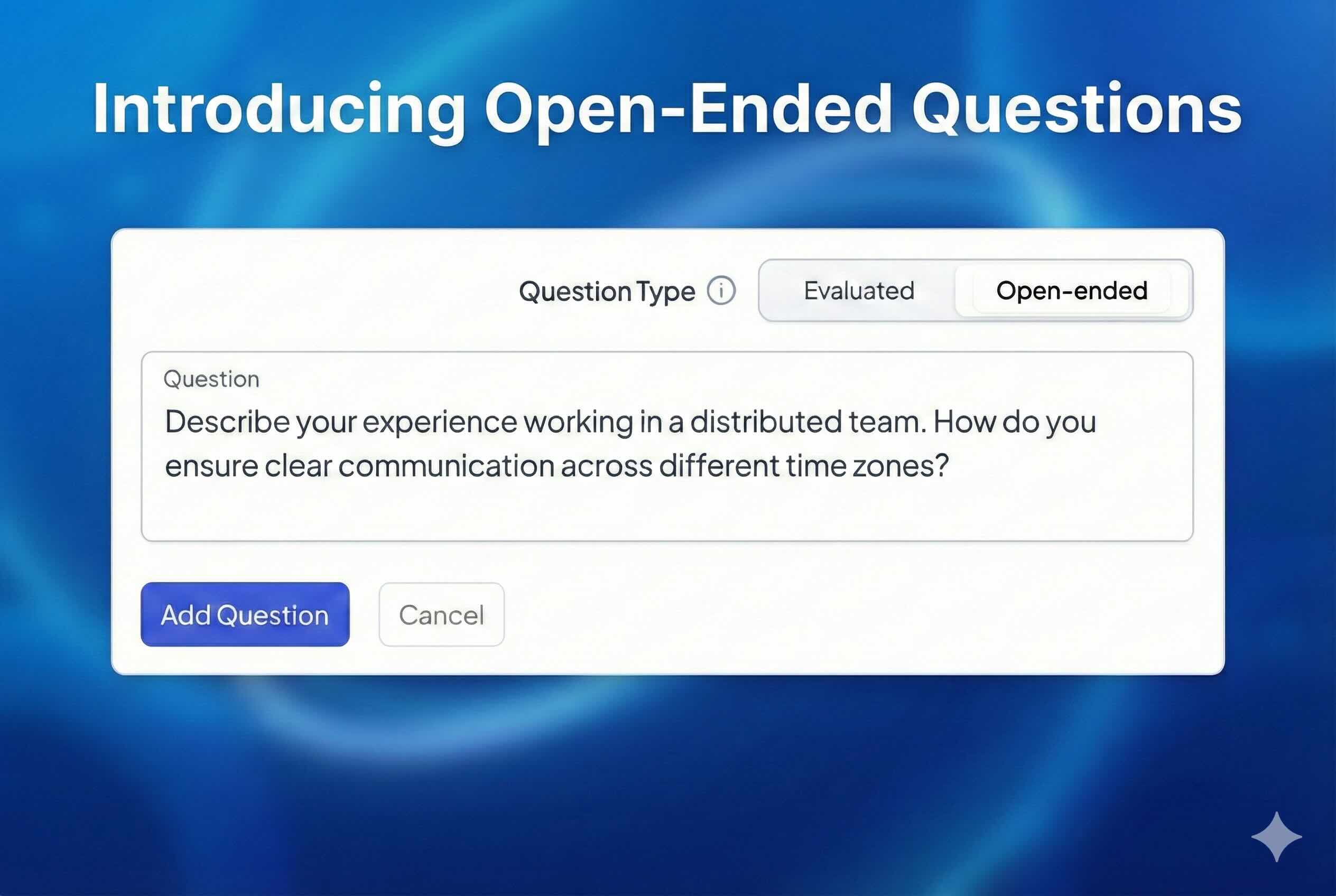

2. Use a Purpose-Built Platform

Stop using generic video tools for technical rounds. Platforms like EvoHire are designed to flag anomalies that human eyes miss. Analyzing browser focus, copy-paste events, and interview metadata helps you distinguish between a brilliant engineer and a brilliant prompter.
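To make the browser-side signals concrete, here is a minimal sketch. Browsers emit standard `blur`, `focus`, and `paste` DOM events that an interview page could log; the log format here (a list of dicts with a time `t` in seconds and a `type`) and the `summarize_events` function are hypothetical assumptions, not EvoHire's actual data or method. The sketch totals the time spent away from the interview tab and counts paste events.

```python
def summarize_events(events, session_end):
    """Summarize a hypothetical browser event log for one interview session.

    events: list of {"t": seconds, "type": "blur" | "focus" | "paste"}
    session_end: time (seconds) the session ended, used to close an open blur.
    """
    blur_start = None  # time the tab lost focus, or None while focused
    unfocused = 0.0
    pastes = 0
    for e in sorted(events, key=lambda e: e["t"]):
        if e["type"] == "blur" and blur_start is None:
            blur_start = e["t"]
        elif e["type"] == "focus" and blur_start is not None:
            unfocused += e["t"] - blur_start
            blur_start = None
        elif e["type"] == "paste":
            pastes += 1
    if blur_start is not None:  # tab was still unfocused when the session ended
        unfocused += session_end - blur_start
    return {"unfocused_seconds": unfocused, "paste_count": pastes}

log = [
    {"t": 10.0, "type": "blur"},
    {"t": 25.0, "type": "focus"},
    {"t": 30.0, "type": "paste"},
    {"t": 50.0, "type": "blur"},
]
print(summarize_events(log, session_end=60.0))  # {'unfocused_seconds': 25.0, 'paste_count': 1}
```

Note that an audio agent running on a phone or a separate device would leave no trace in this log, which is why the behavioral signals above still matter.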

3. The "Interrupt" Test

AI agents handle interruptions poorly. If you suspect a candidate is reading a script (or listening to one), politely interrupt them mid-sentence with a clarifying detail.

- Human: Stops, processes the new info, and pivots.

- Cheater: Often stumbles, loses their place, or continues talking over you because the AI hasn't stopped speaking in their ear yet.
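If the call is recorded with per-speaker timing, the interrupt test can be scored by measuring how long the candidate keeps talking after you cut in. This is a minimal sketch with hypothetical function names; candidate speech is assumed to be available as `(start, end)` pairs in seconds, and the 1.5-second `max_overlap` is an illustrative threshold, not a validated one.

```python
def talk_over_seconds(candidate_segments, interrupt_at):
    """How long the candidate keeps speaking after the interviewer begins an
    interruption at time `interrupt_at` (seconds). Segments are (start, end)."""
    for start, end in candidate_segments:
        if start <= interrupt_at < end:
            return end - interrupt_at
    return 0.0  # candidate was not speaking when the interruption began

def kept_talking(candidate_segments, interrupt_at, max_overlap=1.5):
    """True if the candidate talked over the interruption for longer than
    `max_overlap` seconds (an assumed threshold)."""
    return talk_over_seconds(candidate_segments, interrupt_at) > max_overlap

speech = [(10.0, 18.5), (20.0, 26.0)]
print(talk_over_seconds(speech, 15.0))  # 3.5
print(kept_talking(speech, 25.0))       # False (stopped 1.0 s later)
```

Measuring the overlap rather than judging "stumbling" by ear keeps the comparison consistent across interviewers.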

Conclusion

As AI tools become faster, the line between a candidate's skill and their tools will blur. However, integrity is a non-negotiable engineering skill. By updating your interview process to catch these new "audio" cheats, you make it far more likely that you're hiring the engineer, not the bot.

FAQ

Q: Can anti-cheat software detect Audio AI?

Most standard proctoring software cannot detect Audio AI, because the tool runs at the system-audio level or on an external device. However, advanced platforms like EvoHire use behavioral analysis to flag the latency and audio patterns associated with this type of cheating.

Q: Is it legal to record interviews to check for cheating?

Generally yes, provided you obtain the candidate's consent. Most modern interviewing platforms include consent forms in which candidates agree to be recorded for review purposes.
